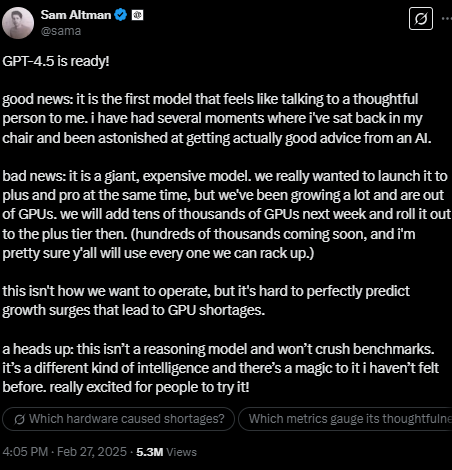

OpenAI’s highly anticipated GPT-4.5 model is facing a staggered rollout, not because of software limitations but because the company has run out of GPUs, according to CEO Sam Altman.

In a post on X, Altman revealed that GPT-4.5, which he described as “giant” and “expensive,” will require tens of thousands more GPUs before OpenAI can expand access. Initially, the model will be available to ChatGPT Pro subscribers, with ChatGPT Plus users gaining access next week.

Why GPT-4.5 Is So Expensive

With its massive size and computational demands, GPT-4.5 comes at a steep cost. OpenAI has set API pricing at:

- $75 per million input tokens (~750,000 words)

- $150 per million output tokens

That’s 30 times the input cost and 15 times the output cost of GPT-4o, OpenAI’s primary commercial model.
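To make these multipliers concrete, here is a minimal Python sketch that prices a single API request under each model. The GPT-4.5 rates are the ones listed above. The GPT-4o rates ($2.50 input and $10 output per million tokens) are back-calculated from the article's 30x and 15x comparison. The 10,000-input / 2,000-output request is a hypothetical workload. The sketch assumes simple per-token billing and ignores any discounts.

```python
# Per-million-token API prices in USD.
# GPT-4.5 prices are taken from the article; GPT-4o prices are
# back-calculated from the article's "30x input, 15x output" comparison.
PRICES_PER_MILLION = {
    "gpt-4.5": {"input": 75.00, "output": 150.00},
    "gpt-4o": {"input": 75.00 / 30, "output": 150.00 / 15},
}

TOKENS_PER_MILLION = 1_000_000


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request, assuming plain per-token billing."""
    prices = PRICES_PER_MILLION[model]
    return (
        input_tokens * prices["input"] + output_tokens * prices["output"]
    ) / TOKENS_PER_MILLION


if __name__ == "__main__":
    # Hypothetical request: 10,000 input tokens, 2,000 output tokens.
    big = request_cost("gpt-4.5", 10_000, 2_000)
    small = request_cost("gpt-4o", 10_000, 2_000)
    print(f"GPT-4.5: ${big:.4f}")        # GPT-4.5: $1.0500
    print(f"GPT-4o:  ${small:.4f}")      # GPT-4o:  $0.0450
    print(f"Ratio:   {big / small:.1f}x")  # Ratio:   23.3x
```

Because the input premium (30x) is larger than the output premium (15x), the effective multiplier for a real workload falls somewhere between 15x and 30x depending on the mix of input and output tokens. This input-heavy hypothetical request works out to roughly 23x.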

“We’ve been growing a lot and are out of GPUs,” Altman wrote. “We will add tens of thousands of GPUs next week and roll it out to the Plus tier then … This isn’t how we want to operate, but it’s hard to perfectly predict growth surges that lead to GPU shortages.”

OpenAI’s Long-Term Plan to Fix GPU Bottlenecks

This isn’t the first time Altman has pointed to computing constraints as a bottleneck for OpenAI’s growth. The company has been exploring solutions to ensure its models can scale, including:

- Developing custom AI chips to reduce reliance on external suppliers.

- Building an extensive network of data centers to increase computing power.

With AI demand surging and GPUs in short supply, OpenAI’s ability to expand its infrastructure will be crucial to its long-term position in the AI market, TechCrunch reports.