The battle over AI regulation is heating up, and Big Tech is pouring millions into Washington to ensure that the rules favor its interests.

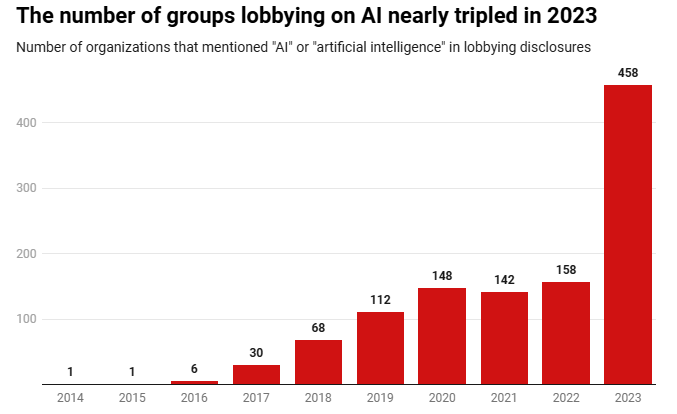

In just one year, the number of groups lobbying the U.S. government on AI nearly tripled, skyrocketing from 158 in 2022 to 451 in 2023, according to data from OpenSecrets, a nonprofit that tracks lobbying and campaign finance.

While major tech firms publicly support AI regulation, behind closed doors they push for more flexible, voluntary rules, according to congressional aides and AI policy experts.

The AI lobbying frenzy took off after OpenAI launched ChatGPT in November 2022. About six months later, leading AI researchers and industry executives signed a high-profile statement warning that the risk of extinction from AI should be treated as a global priority, alongside pandemics and nuclear war.

Governments worldwide took action:

- President Joe Biden signed an executive order on AI.

- The European Union updated its AI Act to regulate models like ChatGPT.

- The UK hosted the first AI Safety Summit, bringing world leaders together.

While the U.S. Congress has yet to pass an AI law, legislative activity has surged, with Senate Majority Leader Chuck Schumer launching “Insight Forums” to educate lawmakers on AI. The growing likelihood of federal AI regulation has drawn a flood of lobbyists to Capitol Hill, each representing different interests in the AI ecosystem.

“Congress has been writing AI bills for years. What’s new is the scale at which they’re doing it now,” said Divyansh Kaushik, vice president at Beacon Global Strategies.

New Players in the AI Lobbying Game

Of the 451 organizations lobbying on AI in 2023, 334 were newcomers, among them leading AI startups such as OpenAI, Anthropic, and Cohere.

But they weren’t alone. Companies from industries far beyond tech, such as Visa (payments), GSK (pharmaceuticals), and Ernst & Young (professional services), started lobbying on AI for the first time. Even the venture capital firm Andreessen Horowitz and the startup accelerator Y Combinator joined the game.

Nonprofits also jumped into AI policy:

- Mozilla Foundation and Omidyar Network pushed for ethical AI.

- The NAACP and labor unions like the AFL-CIO focused on AI’s societal impact.

- AI safety-focused groups, like the Center for AI Policy and Center for AI Safety Action Fund, entered the conversation.

- Universities, including MIT and Yale, also began lobbying on AI issues.

This surge in AI lobbying demonstrates how rapidly AI has become a mainstream policy issue—affecting sectors far beyond Silicon Valley.

Big Tech’s Deep Pockets vs. AI Advocacy Groups

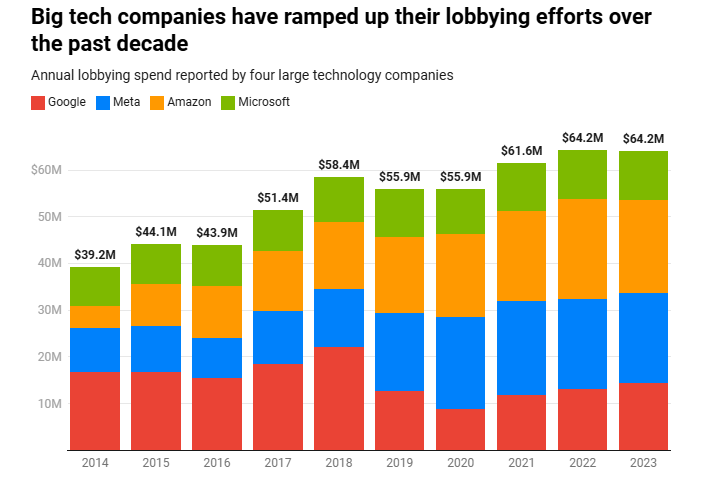

All lobbying organizations are required to disclose how much they spend, but it’s impossible to separate AI lobbying costs from other policy issues in these reports. That said, the financial gap between Big Tech and advocacy groups is staggering.

Here’s what some major players spent on lobbying in 2023:

- Amazon, Meta, Google (Alphabet), and Microsoft each spent over $10 million.

- The Information Technology Industry Council (a trade group) spent $2.7 million.

- Meanwhile, nonprofits like the Mozilla Foundation spent just $120,000, and the Center for AI Safety Action Fund spent $80,000.

“Tech companies are likely spending even more than reported, as official lobbying figures only account for direct conversations on specific laws,” said Hamza Chaudhry, U.S. Policy Specialist at the Future of Life Institute.

With AI regulation on the horizon, Big Tech is playing to win—shaping policies that will define the future of AI for years to come.

Public Statements vs. Private Lobbying: The Double Game of AI Regulation

When it comes to AI regulation, Big Tech talks one way in public and another behind closed doors.

Publicly, AI leaders—from OpenAI’s Sam Altman to Anthropic’s Dario Amodei—have repeatedly called for regulation, testifying in hearings and attending Insight Forums to discuss responsible AI oversight. Even Microsoft’s Brad Smith has supported a federal licensing system and a dedicated AI regulatory agency.

And let’s not forget the voluntary commitments that OpenAI, Google, Microsoft, Anthropic, and other leading AI companies made at the White House in July 2023, a grand display of cooperation to mitigate AI risks.

Behind closed doors, however, tech companies are far less enthusiastic about strict AI oversight. According to multiple sources, the message they deliver in private meetings with congressional offices leans toward voluntary or highly flexible rules, anything that keeps government intervention to a minimum.

“Anytime you want to make a tech company do something mandatory, they’re gonna push back on it,” said one congressional staffer familiar with these discussions.

Some influential industry voices go further and reject regulation outright. In December 2023, Ben Horowitz, co-founder of Andreessen Horowitz, said his venture capital firm would support any political candidate who opposes regulations that could “stifle innovation.”

In other words, when AI execs step onto a public stage, they embrace regulation—but when they step into a private meeting, the message shifts to flexibility, self-regulation, and keeping mandatory rules to a minimum.

With the 2024 presidential election approaching, time is running out for Congress to pass any AI-related legislation.

Some argue that lobbying, for all its corporate motives, can help shape smarter policies that balance national security, economic growth, and innovation. Others worry that tech companies are putting profits ahead of public safety and watering down potential AI laws before they ever reach a vote.

One thing is clear: whether an AI bill passes now or in the next Congress, the lobbying war is only going to intensify as regulation moves closer to reality.